Here's an example of running JChav, using digg.com as the subject of the test.

Producing a JMeter script

The first step is to produce a JMeter script that will exercise the site you wish to test.

The Jakarta JMeter web pages describe how to do this in depth.

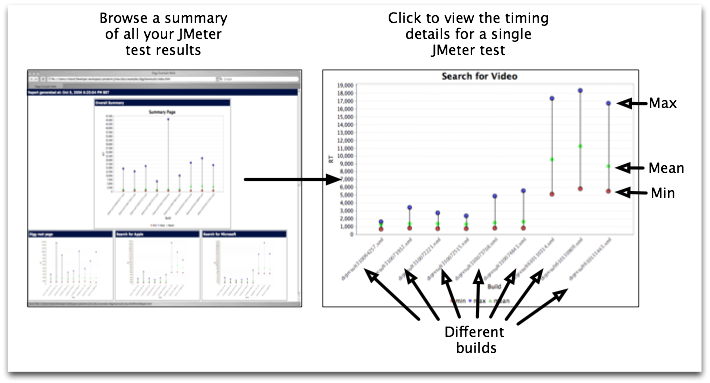

JChav produces the top level set of images based upon the labels that you put into the JMeter configuration file.

So where possible, give your JMeter tasks meaningful names. This is especially important if you are using the HTTP proxy tool built into JMeter to make test generation easy.

JChav will do its best to turn URLs into something meaningful, but if you take the trouble to set the name on the task the resulting pages will be better.

Make sure that the test plan you have created runs as you expect inside the JMeter workbench. When you are happy with it, save the script as a .jmx file.

We have included a small example called digwalk.jmx which performs a series of simple calls to the Digg web site.

If you'd rather jump in and use JMeter to test your own site, we've provided localhost.jmx, a JMeter test which simply requests the home page of a site running on your localhost on port 80. It's a template to get you up and running quickly. Also check out the examples that ship with JMeter in their docs/demos/ directory.

Running our script from Ant

JChav includes build-example.xml, an Ant build file set up to run the digg.com tests and produce a JChav report. You'll find it in the jchav/docs/examples directory. Run it with ant -f build-example.xml.

If you're logged in to your computer as the user jimbo you can customize the build for your machine by creating a file called jchav/docs/examples/jimbo.properties which can contain something along these lines:

    jmeter.install.dir=/Applications/jakarta-jmeter-2.2
    jchav.libs.dir=/Users/jimbo/jchav-1_0_0

Alternatively, if you don't want to create a per-user properties file, go into the docs/examples directory and edit the two lines in build.properties to let JChav know where it's installed and where to find JMeter. Then, from the docs/examples directory, run ant -f build-example.xml. It'll take a while to run as it samples the digg.com web site. When it has finished, open digjchavresults/index.html in your web browser.
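As a rough illustration of how a build file can pick up settings like these, the sketch below loads a per-user properties file before the shared build.properties. It is an assumption about the mechanism, not a copy of build-example.xml, and the project and target names are made up for the example.

```xml
<project name="properties-sketch" default="show-settings" basedir=".">

  <!-- Load jimbo.properties (or whatever user.name resolves to) first.
       Ant silently skips the file if it does not exist. -->
  <property file="${basedir}/${user.name}.properties"/>

  <!-- Ant properties are immutable once set, so values from the per-user
       file win and build.properties only fills in whatever is still unset. -->
  <property file="${basedir}/build.properties"/>

  <!-- Hypothetical helper target: print the values the build will use. -->
  <target name="show-settings">
    <echo message="JMeter install dir: ${jmeter.install.dir}"/>
    <echo message="JChav libs dir:     ${jchav.libs.dir}"/>
  </target>

</project>
```

Because the first definition of a property wins, the order of the two property elements is what gives the per-user file priority.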

How the script works

The good folks over at Programmer Planet have produced an Ant task for running JMeter, called JMeter Ant Task, which is included in recent JMeter distributions in the jmeter/extras folder.
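Before the jmeter element can be used in a build file, the task has to be declared. A minimal sketch of that declaration follows. It assumes the jmeter.install.dir property points at your JMeter installation and that the task's jar in the extras folder matches ant-jmeter*.jar; check the jar name and class name against your distribution.

```xml
<!-- Declare the JMeter Ant task so that <jmeter .../> can be used later.
     Assumption: the jar lives in the extras folder and its name starts
     with "ant-jmeter"; the exact file name varies between releases. -->
<taskdef name="jmeter"
         classname="org.programmerplanet.ant.taskdefs.jmeter.JMeterTask">
  <classpath>
    <fileset dir="${jmeter.install.dir}/extras">
      <include name="ant-jmeter*.jar"/>
    </fileset>
  </classpath>
</taskdef>
```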

The job of this Ant task is to run our tests and produce an output file containing the results. It is important, however, that each test run produces a different output file.

By default the task appends information to one file, but we want one file per run so that we can judge whether the changes we have made to the software have improved or degraded performance over time.

To do this, create a unique build id for each build performed. If you are using a continuous integration tool such as CruiseControl, use its build id for the build; otherwise use something like a timestamp.

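For example:

    <!-- Derive a build id from the current date and time -->
    <tstamp>
      <format property="build.id" pattern="dMMhhmmss" locale="en"/>
    </tstamp>

    <!-- Run the test plan, writing the results to a file named after the build id -->
    <jmeter
        jmeterhome="c:\jakarta-jmeter-1.8.1"
        testplan="${basedir}/test/scripts/digwalk.jmx"
        resultlog="${basedir}/test/results/digresult${build.id}.xml"/>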

Every time we run the jmeter target we create a new file in the ${basedir}/test/results/ directory. This is the directory that we pass to the JChav task to produce our performance graphs.
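The choice between a CI build id and a timestamp can be made inside the build file itself. The sketch below assumes your CI tool hands its build id to Ant as a property named label (CruiseControl is believed to do this, but verify the name for your setup). The project and target names are invented for the example, and the timestamp pattern is a suggestion rather than the one JChav's own example uses.

```xml
<project name="build-id-sketch" default="show-build-id" basedir=".">

  <!-- If the CI server supplied a "label" property (assumed name),
       reuse it as the build id. -->
  <condition property="build.id" value="${label}">
    <isset property="label"/>
  </condition>

  <!-- Otherwise fall back to a timestamp. Ant properties are immutable,
       so this has no effect when build.id was already set above.
       HH is the 24-hour clock, so morning and afternoon runs get
       different ids and file names sort in chronological order. -->
  <tstamp>
    <format property="build.id" pattern="yyyyMMddHHmmss" locale="en"/>
  </tstamp>

  <target name="show-build-id">
    <echo message="build.id=${build.id}"/>
  </target>

</project>
```

Running ant -Dlabel=build.42 against this file should echo build.42, while a plain ant run should echo a timestamp.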

Adding JChav to the build

First, make sure that the jchav task is available to your Ant build. The following taskdef imports the task:

    <taskdef name="jchav" classname="com.googlecode.jchav.ant.JChavTask">
      <classpath>
        <fileset dir="${jchav.libs.dir}/">
          <include name="**/*.jar"/>
        </fileset>
      </classpath>
    </taskdef>

Once the task is available you simply need to add it to the build. It requires two attributes. The first is srcdir, the directory that contains all the logs from the JMeter runs, i.e. the directory you chose to write the JMeter output to in the example above.

The second attribute is destdir, the output directory for the images and HTML produced by the task.

    <jchav srcdir="${basedir}/test/results/"
           destdir="${basedir}/test/chavoutput/"
           reporttitle="Digg Example Walk"/>
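Putting the pieces together, a complete build file might look something like the sketch below. It is not the build-example.xml that ships with JChav: the project name, target names and timestamp pattern are invented for illustration, the JMeter task jar is assumed to match ant-jmeter*.jar in the extras folder, and jmeter.install.dir and jchav.libs.dir are assumed to be set in build.properties.

```xml
<project name="jchav-sketch" default="report" basedir=".">

  <!-- Assumed to define jmeter.install.dir and jchav.libs.dir -->
  <property file="${basedir}/build.properties"/>

  <!-- Declare the two tasks used below -->
  <taskdef name="jmeter"
           classname="org.programmerplanet.ant.taskdefs.jmeter.JMeterTask">
    <classpath>
      <fileset dir="${jmeter.install.dir}/extras" includes="ant-jmeter*.jar"/>
    </classpath>
  </taskdef>

  <taskdef name="jchav" classname="com.googlecode.jchav.ant.JChavTask">
    <classpath>
      <fileset dir="${jchav.libs.dir}/" includes="**/*.jar"/>
    </classpath>
  </taskdef>

  <!-- Hypothetical target: run the test plan into a fresh result file -->
  <target name="run-jmeter">
    <tstamp>
      <format property="build.id" pattern="yyyyMMddHHmmss" locale="en"/>
    </tstamp>
    <mkdir dir="${basedir}/test/results"/>
    <jmeter jmeterhome="${jmeter.install.dir}"
            testplan="${basedir}/test/scripts/digwalk.jmx"
            resultlog="${basedir}/test/results/digresult${build.id}.xml"/>
  </target>

  <!-- Hypothetical target: build the report from every result file so far -->
  <target name="report" depends="run-jmeter">
    <mkdir dir="${basedir}/test/chavoutput"/>
    <jchav srcdir="${basedir}/test/results/"
           destdir="${basedir}/test/chavoutput/"
           reporttitle="Digg Example Walk"/>
  </target>

</project>
```

Making report depend on run-jmeter means each invocation adds one new result file before the charts are regenerated, so the report grows by one data point per build.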