We think you will get the most benefit from using JChav alongside a continuous integration tool like CruiseControl or Continuum.

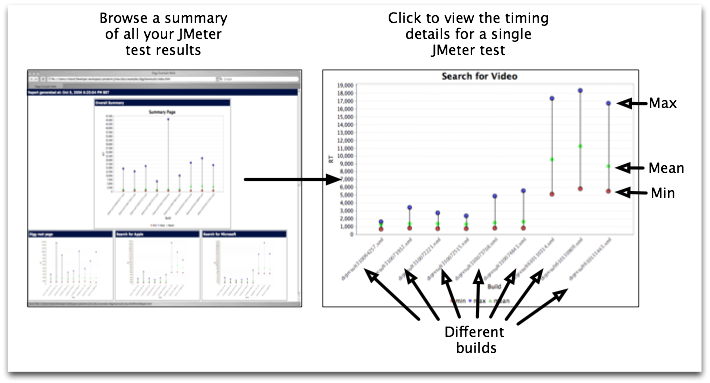

By integrating JChav into the automated build/test/deploy cycle, you also get to see whether each change has a positive or negative effect on performance. The results are immediately available to all interested parties.

The typical build arrangement we use with Ant and CruiseControl is as follows:

As soon as a developer commits a change to the source code repository, an automated build is triggered. The normal Ant build runs, including any Checkstyle constraints, JUnit tests, etc. The build then deploys the application before triggering the JMeter scripts and the JChav performance chart generation. The email notification sent to all build participants contains a link to the generated charts.
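To make the ordering of this cycle concrete, here is a minimal Python sketch. It is not part of JChav, CruiseControl or Continuum: the stage names, `run_cycle`, `notification` and the chart URL are all hypothetical. Each stage is modelled as a function returning success or failure, and we assume that a failing stage stops the cycle, as a failing Ant target normally stops the build.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical: each stage stands in for an Ant target (compile, checkstyle,
# JUnit, deploy, JMeter, JChav) and returns True on success.
Stage = Callable[[], bool]


@dataclass
class BuildResult:
    completed: List[str] = field(default_factory=list)
    failed: Optional[str] = None
    chart_url: Optional[str] = None


def run_cycle(stages: List[Tuple[str, Stage]], chart_url: str) -> BuildResult:
    """Run stages in order, stopping at the first failure."""
    result = BuildResult()
    for name, stage in stages:
        if not stage():
            result.failed = name
            return result
        result.completed.append(name)
    # Every stage, including chart generation, succeeded: charts are available.
    result.chart_url = chart_url
    return result


def notification(result: BuildResult) -> str:
    """Compose the (hypothetical) email body sent to build participants."""
    if result.failed is not None:
        return f"Build FAILED at stage: {result.failed}"
    return f"Build OK. Performance charts: {result.chart_url}"


if __name__ == "__main__":
    ok = lambda: True
    stages = [("compile", ok), ("checkstyle", ok), ("junit", ok),
              ("deploy", ok), ("jmeter", ok), ("jchav", ok)]
    # Hypothetical URL where the CI server publishes the JChav charts.
    print(notification(run_cycle(stages, "http://ci.example.com/jchav/")))
    # prints "Build OK. Performance charts: http://ci.example.com/jchav/"
```

Because the chart link is only included once the deploy, JMeter and JChav stages have all succeeded, a broken build is reported as a failure rather than producing misleading performance figures.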

Bringing performance monitoring into every cycle in a simple way prevents nasty surprises later on.

Adding performance testing to a large, established application can look insurmountable, but take it one test at a time: seeing the graph move in the right direction will motivate you to add more.